From GigaIO

The World’s First 32-GPU Single-Node AI Supercomputer for Next-Gen AI and Accelerated Computing

AI and Accelerated Computing Challenges

Large Language Models (LLMs) and Generative AI depend on large amounts of GPU capacity. Conventional server designs limit the number of GPUs to what physically fits inside the server chassis. These fixed designs often leave valuable and scarce accelerators underutilized. Networking these rigid GPU servers together over traditional networks also adds latency, which reduces overall performance.

The Solution

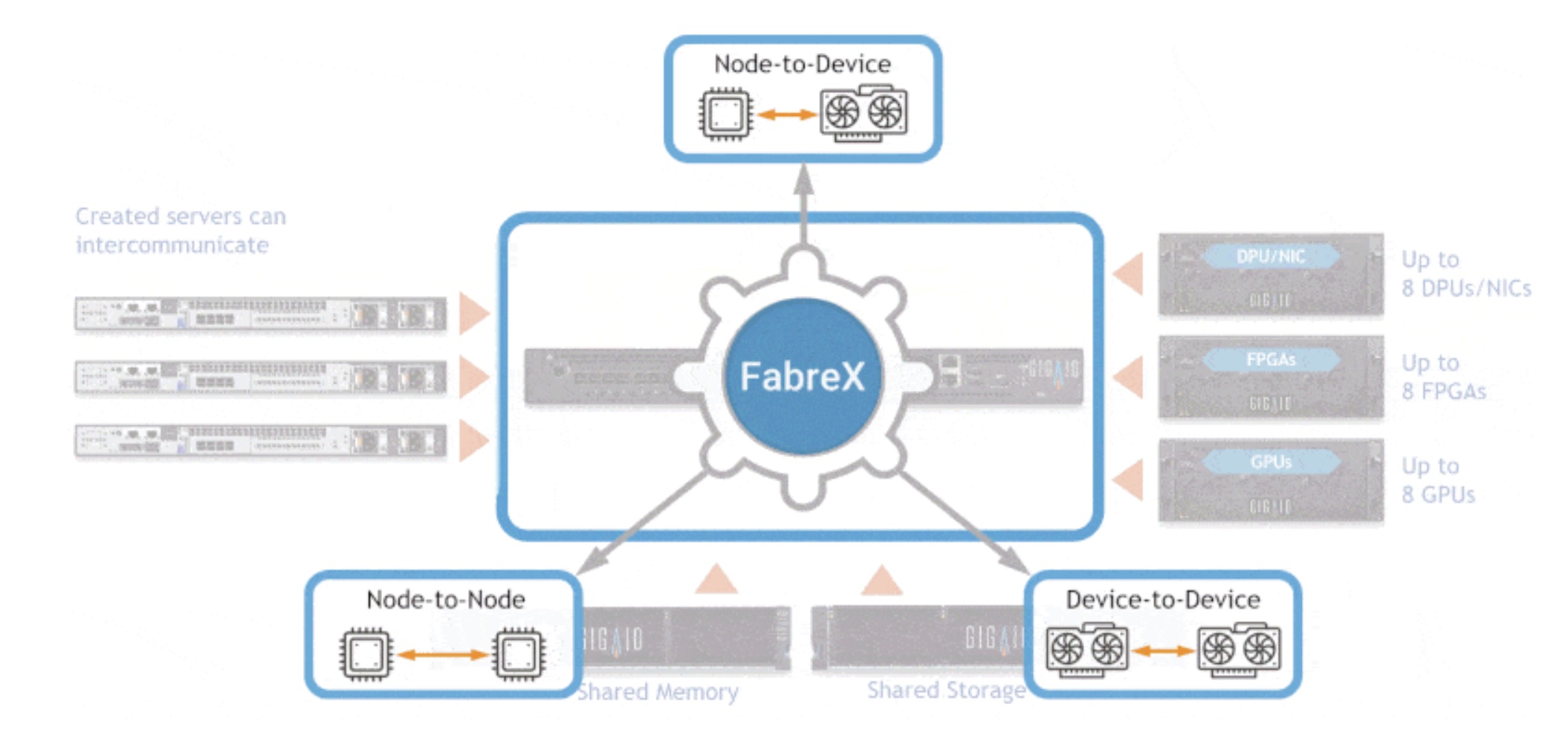

GigaIO Super NODE™ with FabreX™ Dynamic Memory Fabric connects up to 32 AMD Instinct™ MI210 GPUs or 24 NVIDIA A100 GPUs, plus up to 1 PB of storage, to a single off-the-shelf server.

It enables energy-efficient, adaptable GPU supercomputing. Large models can be trained with frameworks such as PyTorch or TensorFlow and scaled through peer-to-peer communication within a single node, without MPI across multiple nodes.

For larger datasets, accelerators can also be distributed across multiple servers and scaled out using node-to-node communication.

Benefits of GigaIO Super NODE

Significantly reduce time-to-results by harnessing substantial compute power on a single node. Simplify your workflow: your existing software runs without modification. Gain flexibility for any workload: maximum power in “BEAST mode,” adaptable configurations in “SWARM mode,” or shared resources in “FREESTYLE mode.” Choose a single-node solution to minimize network overhead, cost, latency, and server management. Reduce power consumption (7 kW per 32-GPU deployment) and save rack space (30% per 32-GPU deployment).

How it Works

The key to FabreX is its ability to use PCIe as a routable network that carries all server-to-server communication. Conventional PCIe only connects resources to a single processor through a PCIe tree. FabreX is the only PCIe-based routable network on the market. It uses Non-Transparent Bridging (NTB) to break down the boundary between two PCIe hierarchical domains.
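The core idea of Non-Transparent Bridging can be sketched as address translation. A window of addresses in one PCIe domain maps onto a region in another domain, so a read or write that lands in the window is forwarded with its offset preserved. This is a minimal, hypothetical model. The `NtbWindow` class and all base addresses are illustrative assumptions, not GigaIO's implementation or any real driver API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NtbWindow:
    """Hypothetical NTB aperture (illustration only, not a real API).

    A local address range [local_base, local_base + size) in one PCIe
    domain is mapped onto [remote_base, remote_base + size) in another.
    """
    local_base: int
    size: int
    remote_base: int

    def translate(self, local_addr: int) -> int:
        """Translate a local-domain address into the remote domain."""
        offset = local_addr - self.local_base
        # Addresses outside the aperture are not forwarded across the bridge.
        if not 0 <= offset < self.size:
            raise ValueError(f"address {local_addr:#x} is outside the NTB window")
        # The offset within the window is preserved; only the base changes.
        return self.remote_base + offset


if __name__ == "__main__":
    # Assumed values: a 256 MiB window at 0x8000_0000 mapped to 0x20_0000_0000.
    window = NtbWindow(local_base=0x8000_0000, size=0x1000_0000,
                       remote_base=0x20_0000_0000)
    print(hex(window.translate(0x8000_1234)))  # prints 0x2000001234
```

Keeping the offset and swapping only the base is what lets each side keep its own independent address map while still reaching memory on the other side.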

At its core, FabreX is built around a memory-address-based router, with intelligence built into the switches that make up the FabreX interconnect network. This routing mechanism virtualizes all hardware resources, both processor subsystems and I/O devices, as memory resources within a single 64-bit virtual address space.

These resources communicate exclusively through the memory semantics of ‘Memory Read’ and ‘Memory Write’. As a result, servers and CPUs can be composed in exactly the same way as endpoints such as GPUs, FPGAs, ASICs, and SmartNICs: anything with a PCIe connection.
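The following toy model illustrates memory-address-based routing with only read and write operations. Every resource is attached as a window in one 64-bit address space. The router forwards each access to whichever resource owns that address, so host memory and a GPU are reached in exactly the same way. This is a hypothetical sketch. `Endpoint`, `AddressRouter`, `mem_read`, `mem_write`, and the addresses used are illustrative names, not GigaIO or FabreX interfaces.

```python
from __future__ import annotations

from dataclasses import dataclass, field

MAX_ADDR = (1 << 64) - 1  # highest address in a 64-bit space


@dataclass
class Endpoint:
    """Hypothetical resource (host DRAM, GPU BAR, NIC, ...) exposed as bytes."""
    name: str
    size: int
    mem: bytearray = field(init=False, repr=False)

    def __post_init__(self) -> None:
        self.mem = bytearray(self.size)


class AddressRouter:
    """Toy address router: maps 64-bit address windows to endpoints."""

    def __init__(self) -> None:
        self._routes: list[tuple[int, int, Endpoint]] = []  # (base, end, endpoint)

    def attach(self, base: int, ep: Endpoint) -> None:
        """Compose a resource into the fabric at a given base address."""
        end = base + ep.size
        if base < 0 or end - 1 > MAX_ADDR:
            raise ValueError("window falls outside the 64-bit address space")
        for b, e, _ in self._routes:
            if base < e and b < end:
                raise ValueError("window overlaps an existing resource")
        self._routes.append((base, end, ep))

    def _resolve(self, addr: int, length: int) -> tuple[Endpoint, int]:
        # Route purely on the address: find the window containing the access.
        for b, e, ep in self._routes:
            if b <= addr and addr + length <= e:
                return ep, addr - b
        raise LookupError(f"no route for address {addr:#x}")

    def mem_write(self, addr: int, data: bytes) -> None:
        ep, off = self._resolve(addr, len(data))
        ep.mem[off:off + len(data)] = data

    def mem_read(self, addr: int, length: int) -> bytes:
        ep, off = self._resolve(addr, length)
        return bytes(ep.mem[off:off + length])


if __name__ == "__main__":
    router = AddressRouter()
    router.attach(0x0, Endpoint("server0-dram", 4096))           # a server's memory
    router.attach(0x1_0000_0000, Endpoint("gpu7-bar", 4096))     # a GPU's memory window
    router.mem_write(0x1_0000_0010, b"hello")
    print(router.mem_read(0x1_0000_0010, 5))  # prints b'hello'
```

Because the router only ever sees addresses, attaching a server and attaching a GPU are the same `attach` call. This mirrors the document's point that servers and endpoints are composed identically. A real fabric performs these lookups in switch hardware rather than in a software loop.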

Unprecedented Compute Capability Available Now

Available today for emerging AI and accelerated computing workloads, the SuperNODE engineered solution, part of the GigaPod family, delivers unprecedented accelerated computing power when you need it. It also offers maximum flexibility and accelerator utilization when your workloads require only a few GPUs.


© 2024 Aurified Consulting. All Rights Reserved.